Kinect Fusion: Dense Surface Mapping and Tracking

Implementation of the paper “Kinect Fusion” by Newcombe et al. [1]. They presented a method for accurate, real-time mapping of indoor scenes that uses only a low-cost depth camera and graphics hardware.
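The mapping step of KinectFusion fuses each new depth frame into a truncated signed distance function (TSDF) volume. Each voxel keeps a running weighted average. What follows is a minimal host-side C++ sketch of that per-voxel update under simplifying assumptions: a constant per-frame weight of 1, and a signed distance measured along the camera ray as observed depth minus voxel depth. It is not taken from this repository's CUDA code. The names `Voxel` and `fuseMeasurement` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>

// Per-voxel state of a truncated signed distance function (TSDF) volume.
struct Voxel {
    float tsdf = 0.0f;   // truncated signed distance, normalized to [-1, 1]
    float weight = 0.0f; // accumulated confidence weight
};

// Fuses one new measurement into a voxel (hypothetical helper, simplified model).
// eta:       observed depth minus voxel depth along the ray, in meters
//            (positive in front of the surface, negative behind it).
// mu:        truncation distance in meters, chosen by the caller.
// maxWeight: cap on the accumulated weight so the model can still adapt.
// Returns false if the voxel lies too far behind the surface and is skipped.
bool fuseMeasurement(Voxel& v, float eta, float mu, float maxWeight) {
    assert(mu > 0.0f && maxWeight > 0.0f);
    if (eta < -mu) {
        return false; // occluded region: this frame says nothing about the voxel
    }
    const float sdf = std::min(1.0f, eta / mu); // truncate far in front of the surface
    const float frameWeight = 1.0f;             // assumption: constant weight per frame
    // Running weighted average of all truncated distances seen so far.
    v.tsdf = (v.weight * v.tsdf + frameWeight * sdf) / (v.weight + frameWeight);
    v.weight = std::min(v.weight + frameWeight, maxWeight);
    return true;
}
```

On the GPU, a kernel would typically run this update once per voxel for every frame, after projecting the voxel into the current depth map. Capping the weight at `maxWeight` keeps the model responsive to changes in the scene.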

This project is part of the lecture 3D Scanning and Motion Capture (https://www.in.tum.de/cg/teaching/winter-term-2021/3d-scanning-motion-capture/). We implemented it in C++ and CUDA.

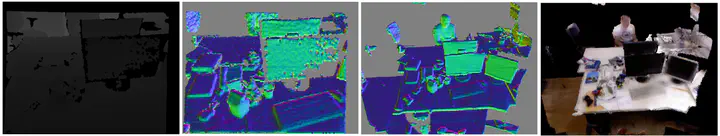

Results

To evaluate our implementation, we used TUM’s RGB-D SLAM Dataset (https://vision.in.tum.de/data/datasets/rgbd-dataset).
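Before tracking and fusion, each depth frame is back-projected into a camera-space vertex map. For the TUM dataset, this first requires converting the stored depth values to meters. The 16-bit depth PNGs are scaled by a factor of 5000 (a value of 5000 corresponds to 1 m), and 0 marks a missing measurement. The sketch below assumes a simple pinhole camera model. Its default intrinsics are the generic values TUM lists for ROS, not the calibrated per-sequence values. `Intrinsics` and `backProject` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>

struct Vec3 {
    float x, y, z;
};

// Pinhole intrinsics. The defaults are TUM's generic ROS values (an assumption);
// the calibrated values for the freiburg1/2/3 sequences differ.
struct Intrinsics {
    float fx = 525.0f, fy = 525.0f, cx = 319.5f, cy = 239.5f;
};

// TUM depth PNGs: raw value / 5000 = depth in meters; 0 means "no data".
constexpr float kTumDepthScale = 5000.0f;

// Back-projects pixel (u, v) with raw TUM depth into a camera-space point.
std::optional<Vec3> backProject(std::uint16_t rawDepth, int u, int v,
                                const Intrinsics& K) {
    assert(K.fx > 0.0f && K.fy > 0.0f);
    if (rawDepth == 0) {
        return std::nullopt; // missing measurement: no vertex for this pixel
    }
    const float z = static_cast<float>(rawDepth) / kTumDepthScale;
    return Vec3{(u - K.cx) * z / K.fx, (v - K.cy) * z / K.fy, z};
}
```

Applying `backProject` to every pixel of a 640×480 frame yields the vertex map from which normals are then estimated.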

The final part of the video above shows the results.